ACE Breakthrough: The Rise of ContextOps and Its Impact on AI Coding

In October 2025, researchers from Stanford and SambaNova Systems released a breakthrough paper:

Agentic Context Engineering: Evolving Contexts for Self-Improving Language Models.

This paper redefines how AI systems should learn, evolve, and apply context.

It marks a shift from prompt engineering to context engineering as the foundation of high-performing AI agents.

And this is precisely the frontier Packmind was built for.

From Static Prompts to Evolving Contexts

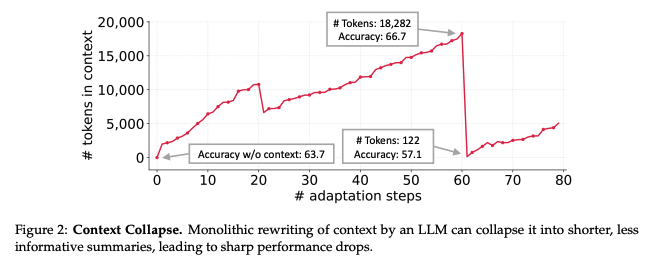

Modern LLM systems don’t just need better prompts; they need evolving playbooks. Traditional prompt optimization compresses everything into a few clever sentences, and ACE shows that this brevity bias hurts performance. Worse, when models repeatedly rewrite or regenerate their context, details erode and the model starts to forget. The paper calls this context collapse (Page 3).

ACE solves this by treating context as living code:

- Generate → try strategies on real tasks

- Reflect → analyze what worked

- Curate → update context incrementally, without wiping previous knowledge

It’s modular, agentic, and self-improving — very similar to how engineering teams maintain and refine standards over time.
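To make the Generate → Reflect → Curate loop concrete, here is a minimal sketch in Python. It is not the paper's implementation: the three functions are hypothetical stand-ins (a real system would call an LLM in `generate` and `reflect`), and success is simulated with a simple substring check. The point it illustrates is the curation step, which appends a lesson instead of rewriting the whole context.

```python
from typing import Optional


def generate(task: str, context: list[str]) -> dict:
    """Stand-in generator: 'succeeds' only if some context entry mentions the task.
    Assumption: a real generator would run an LLM on the task with this context."""
    return {"task": task, "succeeded": any(task in entry for entry in context)}


def reflect(attempt: dict) -> Optional[str]:
    """Stand-in reflector: turn a failed attempt into a reusable lesson."""
    if attempt["succeeded"]:
        return None
    return f"When handling {attempt['task']}, check the team convention first"


def curate(context: list[str], lesson: Optional[str]) -> list[str]:
    """Append the lesson as a new entry; existing entries are never rewritten."""
    if lesson is None or lesson in context:
        return context
    return context + [lesson]


context = ["Prefer small, pure functions"]
for task in ["date parsing", "retry logic", "date parsing"]:
    attempt = generate(task, context)
    context = curate(context, reflect(attempt))

for entry in context:
    print("-", entry)
```

In this toy run the second "date parsing" task finds the lesson learned from the first one, so nothing new is added, and the original entry is still present at the end.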

The Core Idea: Context as a System, Not a String

ACE breaks context into structured units (“bullets”), each containing:

- a rule or insight

- metadata (success rate, relevance, last update)

- scope and retrieval cues

Instead of one giant prompt, the model retrieves and refines just the pieces it needs.
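As a rough illustration of what a structured bullet and selective retrieval could look like, here is a small Python sketch. The `Bullet` schema, its field names, and the keyword-overlap scoring in `retrieve` are all assumptions made for this example; they are not the paper's format or Packmind's data model, and a production system would more likely use embeddings for retrieval.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Bullet:
    """One structured context unit (hypothetical schema for illustration)."""
    rule: str                                    # the rule or insight
    scope: str                                   # where it applies, e.g. "api"
    cues: set[str] = field(default_factory=set)  # retrieval keywords
    helpful: int = 0                             # times the bullet helped
    harmful: int = 0                             # times the bullet misled
    last_update: date = field(default_factory=date.today)

    @property
    def success_rate(self) -> float:
        total = self.helpful + self.harmful
        return self.helpful / total if total else 0.0


def retrieve(playbook: list[Bullet], task: str, scope: str, k: int = 2) -> list[Bullet]:
    """Return up to k in-scope bullets whose cues overlap the task's words."""
    words = set(task.lower().split())
    scored = [
        (len(b.cues & words), b.success_rate, b)
        for b in playbook
        if b.scope == scope
    ]
    # Keep bullets with at least one matching cue, best match first,
    # breaking ties by success rate.
    matches = [s for s in scored if s[0] > 0]
    matches.sort(key=lambda s: (s[0], s[1]), reverse=True)
    return [b for _, _, b in matches[:k]]


playbook = [
    Bullet("Validate request bodies with a schema", "api",
           {"request", "validation"}, helpful=8, harmful=2),
    Bullet("Return structured JSON on errors", "api",
           {"error", "response"}, helpful=5, harmful=0),
    Bullet("Use snapshot tests for components", "ui",
           {"component", "test"}, helpful=3, harmful=1),
]

selected = retrieve(playbook, "add validation to the request handler", "api")
```

Here `selected` contains only the schema-validation bullet: the other API bullet shares no cue with the task, and the UI bullet is out of scope.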

This has huge implications for AI coding:

- You fine-tune context systems, not model weights

- You persist learnings across sessions

- You evolve context through small, interpretable updates

This is exactly the conceptual foundation of ContextOps.

Why This Matters for Context Engineering in AI Coding

Engineering teams using Claude, Copilot, Cursor, Kiro, etc. don’t want to rewrite prompts for each task. They want AI assistants that understand their architecture, follow their patterns, enforce consistency, and improve over time.

ACE confirms what teams feel intuitively:

- Rich, evolving context ≫ static prompts

- Incremental updates reduce drift and cut adaptation latency by up to 86%

- Well-engineered context can push open models to near GPT-4-level performance

Context, not model size, is becoming the real performance frontier.

What It Means for Packmind

ACE formalizes what Packmind has been implementing for the past year.

Packmind enables developers to create, scale, and govern their engineering playbook — built on the same first principles ACE validates:

| ACE Concept | Definition | Packmind Implementation |
|---|---|---|
| Evolving Playbooks | Context grows over time through incremental improvements, not static prompts. | ✅ Standards, Rules & Recipes act as a continuously evolving engineering playbook. |
| Reflection & Curation Loops | The Generator → Reflector → Curator agentic cycle integrates useful insights back into context. | ✅ Code changes and AI outputs analysis → capture of emerging patterns → playbook update suggestions for human validation. |
| Delta Updates | Context evolves through localized delta entries (incremental adds or edits) merged without rewriting the whole prompt. | ✅ Incremental updates and sync between local and shared playbooks. |
| Context-Aware Governance | An oversight layer that ensures the evolving context remains aligned, deduplicated, and reliable through semantic checks and refinement. | ✅ Drift detection, consistency analysis, and Governance Pane to validate rules before distribution. |
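The delta-update and deduplication rows can be sketched in a few lines of Python. Everything here is hypothetical: the `Delta` type, the `apply_deltas` function, and the rule keys are invented for illustration and do not describe Packmind's or the paper's actual mechanics. In particular, the duplicate check is a plain normalized-text comparison standing in for the semantic checks mentioned in the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Delta:
    """A localized change to one playbook entry (hypothetical format)."""
    op: str    # "add" or "edit"
    key: str   # identifier of the entry the delta targets
    text: str  # new rule text


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(text.lower().split())


def apply_deltas(playbook: dict[str, str], deltas: list[Delta]) -> dict[str, str]:
    """Merge deltas into a copy of the playbook without rewriting other entries."""
    merged = dict(playbook)
    for delta in deltas:
        if delta.op == "add":
            seen = {normalize(t) for t in merged.values()}
            # Skip adds that reuse a key or duplicate an existing rule.
            if delta.key in merged or normalize(delta.text) in seen:
                continue
            merged[delta.key] = delta.text
        elif delta.op == "edit":
            # Edits only touch the targeted entry; unknown keys are ignored.
            if delta.key in merged:
                merged[delta.key] = delta.text
        else:
            raise ValueError(f"unknown delta op: {delta.op}")
    return merged


shared = {
    "naming": "Use camelCase for variables",
    "errors": "Never swallow exceptions",
}
deltas = [
    Delta("edit", "errors", "Never swallow exceptions; log and rethrow"),
    Delta("add", "tests", "Co-locate tests with source files"),
    Delta("add", "naming-2", "use camelCase  for variables"),  # near-duplicate
]
updated = apply_deltas(shared, deltas)
```

After this run `updated` has three entries: the edited `errors` rule, the new `tests` rule, and the untouched `naming` rule. The near-duplicate add is dropped, and `shared` itself is left unchanged, which keeps each update small and reviewable.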

In essence:

ACE shows that evolving, structured, governed context outperforms static prompts and can reach fine-tuning-level performance without retraining.

Packmind makes those principles operational, bringing agentic context engineering to every repo, IDE, and team.

The Broader Shift: ContextOps as the New DevOps

ACE marks the beginning of a shift from prompt engineering to context engineering, and from isolated AI agents to compound, self-improving context.

Just as DevOps unified code, deployment, and monitoring, ContextOps will unify context creation, validation, and distribution across teams and AI assistants.

Packmind’s mission aligns perfectly:

Create, scale, and govern the engineering playbook behind your AI code

Why It’s a Big Deal

ACE isn’t just an academic benchmark win.

It’s proof that context is a programmable, governable layer of intelligence — something that can be versioned, audited, and evolved collaboratively.

For Packmind, this means:

- Validation: the architecture we’ve been building has formal backing from top research labs.

- Direction: our next step is enabling automated reflection and curation directly inside coding environments.

- Momentum: the industry is converging on context-centric architectures, and Packmind already leads the developer-facing implementation with its open-source project.

Final Thought

In the future, AI agents won’t be “prompted.”

They’ll be context-engineered.

ACE shows how.

Packmind is building where it happens.

📘 If you want the practical side, read our “Context Engineering 101” guide or explore the Packmind open-source repo to start building your own evolving engineering playbook.