How Mature Is Context Engineering in Open Source? A Study of 10,000 Repos

Context engineering means designing the information, rules, and tools you give to AI agents to guide how they work and what they produce. Better context leads to better results from your agent (and is less costly than fine-tuning a model).

In practice, it mostly means placing Markdown files in specific locations in a codebase so that AI agents can load and use them.

In early 2026, I wondered how mature open-source projects are in context engineering, so I ran a quick experiment to get an overview.

Setup and dataset

I’ve randomly selected 10,000 GitHub projects that:

- Have been updated since November 1st, 2025, to focus on active projects.

- Have more than 2,000 stars.

- Don’t contain keywords such as “awesome”, “list”, or “interview”, to keep only actual projects with code.
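The selection criteria above can be expressed as a simple filter. Here is a minimal Python sketch, assuming each repo is a dict with hypothetical `name`, `description`, `stars` and `pushed_at` keys; it is an illustration, not necessarily how this dataset was actually built:

```python
from datetime import datetime, timezone

# Hypothetical thresholds mirroring the criteria listed above.
CUTOFF = datetime(2025, 11, 1, tzinfo=timezone.utc)
MIN_STARS = 2000
EXCLUDED_KEYWORDS = ("awesome", "list", "interview")


def is_candidate(repo: dict) -> bool:
    """Return True if a repo record matches the selection criteria.

    Assumes a dict with 'name', 'description', 'stars' and a
    timezone-aware 'pushed_at' datetime (hypothetical schema).
    """
    if repo["pushed_at"] < CUTOFF:
        return False  # not updated since November 1st, 2025
    if repo["stars"] <= MIN_STARS:
        return False  # needs strictly more than 2,000 stars
    text = f"{repo['name']} {repo.get('description') or ''}".lower()
    # Naive substring check against the excluded keywords.
    return not any(keyword in text for keyword in EXCLUDED_KEYWORDS)


if __name__ == "__main__":
    repo = {
        "name": "awesome-llm-tools",
        "description": "A curated list",
        "stars": 12000,
        "pushed_at": datetime(2025, 12, 3, tzinfo=timezone.utc),
    }
    print(is_candidate(repo))  # False: the name contains "awesome"
```

Plain substring matching also rejects names like “playlist” or “checklist”; matching whole words would be stricter.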

For each repo, I looked for specific files used by coding agents (e.g., CLAUDE.md, …) to identify which agents the project supports.

Here is the list of agents I’ve searched for:

- Claude Code

- GitHub Copilot

- Cursor

- AGENTS.md files (not an agent, but a shared standard for customizing agents)

- Gemini

- Kiro

- KiloCode

- Cline

- Continue

- Windsurf

- Codex

Note: All agents above, except Claude Code and Continue, support AGENTS.md as well as their agent-specific artefacts.

For Claude Code, GitHub Copilot and Cursor, I’ve searched for all the artefacts they support (rules, commands, subagents, skills, hooks, …).
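Detecting which agents a repo supports boils down to matching file paths against each agent’s conventions. Below is a minimal Python sketch covering only a subset of the agents listed above; the path rules are my own illustrative assumptions based on commonly documented locations, not the exact rules used for this study:

```python
from pathlib import PurePosixPath


def detect_agents(paths: list[str]) -> set[str]:
    """Return the agents whose artefacts appear in a repo's file list.

    `paths` are repo-relative POSIX paths (e.g. the output of `git ls-files`).
    The rules below are an illustrative subset, not an exhaustive list.
    """
    found = set()
    for raw in paths:
        path = PurePosixPath(raw)
        parts = path.parts
        # Claude Code: CLAUDE.md at any depth, or anything under a .claude/ folder
        if path.name == "CLAUDE.md" or ".claude" in parts:
            found.add("Claude Code")
        # Shared standard, read by most of the agents listed above
        if path.name == "AGENTS.md":
            found.add("AGENTS.md")
        # Gemini CLI context file
        if path.name == "GEMINI.md":
            found.add("Gemini")
        # GitHub Copilot: repo-wide and path-specific instruction files
        if parts == (".github", "copilot-instructions.md") or (
            parts[:2] == (".github", "instructions")
            and path.name.endswith(".instructions.md")
        ):
            found.add("GitHub Copilot")
        # Cursor: legacy .cursorrules file or the .cursor/rules/ folder
        if path.name == ".cursorrules" or parts[:2] == (".cursor", "rules"):
            found.add("Cursor")
    return found


if __name__ == "__main__":
    print(sorted(detect_agents(["CLAUDE.md", "docs/AGENTS.md", ".cursor/rules/style.mdc"])))
    # ['AGENTS.md', 'Claude Code', 'Cursor']
```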

🔍 Usage of AI agent artefacts

Key Takeaway #1: Open-source projects are not yet mature in context engineering

Of the 10,000 repos, only 978 (9.78%) have at least one file linked to an AI coding agent. We could argue that’s not that bad, but this share falls far short of how widely AI is now used in software engineering across the industry.

In the 2025 Stack Overflow Developer Survey, 84% of respondents said they use or plan to use AI tools in their development process. In my opinion, OSS maintainers should invest in setting up these artefacts to improve their contributors’ developer experience.

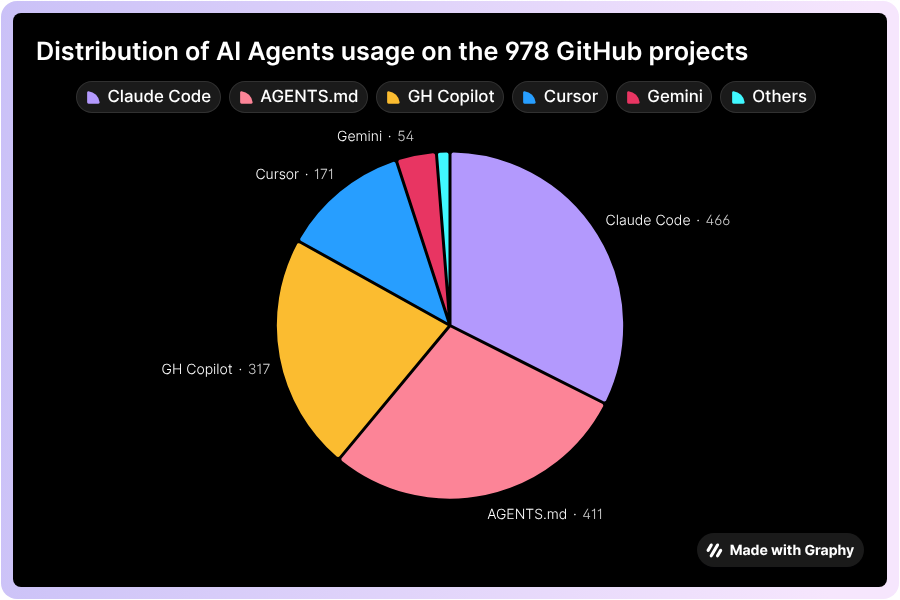

Key Takeaway #2: Claude Code leads the race

Claude Code is used by 466 repos, followed by the standard AGENTS.md, GitHub Copilot, and finally Cursor.

Only 18 repos use artefacts from one of the following agents: Cline, Codex, Kiro, Continue, KiloCode. This is understandable: popular or standardized artefacts (AGENTS.md) make more sense, since any contributor can benefit from them.

Interestingly, 471 repos (almost half of the 978 repos with agent artefacts) use artefacts from two or more agents. The most common pair is AGENTS.md + CLAUDE.md (89 repos).
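Counting multi-agent repos and their most common combination is a small aggregation. Here is a minimal Python sketch, assuming you already have, for each repo, the set of agents detected in it. It counts each repo’s exact combination of agents, which is one possible reading of “most common pair”; counting every pair contained in larger combinations would give different numbers:

```python
from collections import Counter


def multi_agent_stats(repo_agents: dict[str, set[str]]) -> tuple[int, Counter]:
    """Count repos with artefacts from 2+ agents and tally their combinations.

    `repo_agents` maps a repo name to the set of agents detected in it.
    """
    combos = Counter(
        # Sorting gives a canonical key, so {"A", "B"} and {"B", "A"} match.
        tuple(sorted(agents))
        for agents in repo_agents.values()
        if len(agents) >= 2
    )
    return sum(combos.values()), combos


if __name__ == "__main__":
    sample = {
        "repo-a": {"AGENTS.md", "Claude Code"},
        "repo-b": {"Claude Code", "AGENTS.md"},
        "repo-c": {"Cursor"},
        "repo-d": {"AGENTS.md", "Cursor", "GitHub Copilot"},
    }
    multi, combos = multi_agent_stats(sample)
    print(multi, combos.most_common(1))
    # 3 [(('AGENTS.md', 'Claude Code'), 2)]
```

With the figures from this study, the share of multi-agent repos is 471 / 978 ≈ 48%, hence “almost half”.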

👀 A deeper analysis of the artefacts

1. Claude Code artefacts

Key Takeaway #3: Skills have a huge impact on context engineering

Looking more closely at the Claude Code artefacts, CLAUDE.md files unsurprisingly appear in almost all of these repos (449 out of 466). We also see that 85 repos have at least one slash command (a reusable prompt for repetitive tasks).

Although Claude Skills were only released in mid-October 2025, 44 repos already use them. Hooks are used less. I bet that if I rerun this experiment in 3 months, more repos will use skills than subagents.

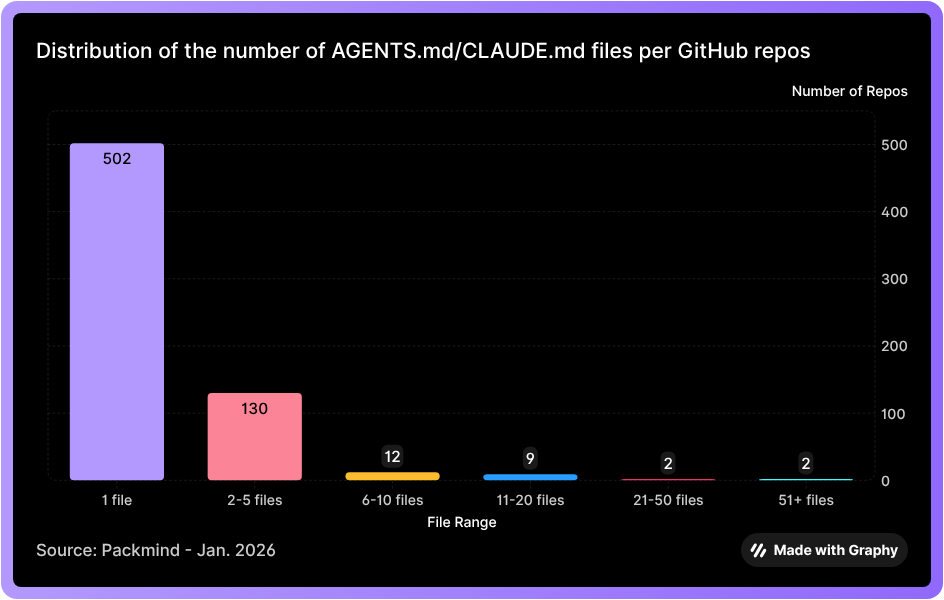
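To break Claude Code usage down by artefact type, one option is to classify paths by location. Here is a minimal Python sketch, assuming the project-level conventions I’m aware of: CLAUDE.md files, slash commands in `.claude/commands/`, subagents in `.claude/agents/`, skills as `.claude/skills/<name>/SKILL.md`, and hooks declared under a `hooks` key in `.claude/settings.json`. The function names are hypothetical:

```python
import json
from pathlib import PurePosixPath
from typing import Optional


def claude_artefact_kind(raw_path: str) -> Optional[str]:
    """Classify a repo-relative path as a Claude Code artefact, or None."""
    path = PurePosixPath(raw_path)
    if path.name == "CLAUDE.md":
        return "CLAUDE.md"
    parts = path.parts
    if ".claude" not in parts:
        return None
    # Path components below the .claude/ directory
    rest = parts[parts.index(".claude") + 1:]
    if len(rest) >= 2 and rest[0] == "commands" and path.suffix == ".md":
        return "command"  # reusable slash-command prompt
    if len(rest) >= 2 and rest[0] == "agents" and path.suffix == ".md":
        return "subagent"
    if len(rest) == 3 and rest[0] == "skills" and path.name == "SKILL.md":
        return "skill"  # one skill folder per SKILL.md
    return None


def settings_define_hooks(settings_text: str) -> bool:
    """Hooks are configured in a settings JSON file, not as separate files."""
    return bool(json.loads(settings_text).get("hooks"))


if __name__ == "__main__":
    paths = ["CLAUDE.md", ".claude/commands/review.md",
             ".claude/skills/release/SKILL.md", "src/app.py"]
    print({p: claude_artefact_kind(p) for p in paths})
```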

2. 🌲 Usage of nested artefacts

AGENTS.md and CLAUDE.md files can be nested in different parts of a project to bring relevant context only where it is needed. Instead of loading one large file into the context window for every task, the agent loads only the files that match the locations it is working on.
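The idea behind nesting can be illustrated with a simplified lookup: when an agent works on a file, the relevant context files are those in that file’s directory and its ancestors. Here is a minimal Python sketch of that simplified model; real agents differ in the details (for example, whether they merge all matching files or favour the closest one), so treat it as an illustration rather than any agent’s actual algorithm:

```python
from pathlib import PurePosixPath

CONTEXT_FILE_NAMES = ("AGENTS.md", "CLAUDE.md")


def applicable_context_files(target: str, context_files: set[str]) -> list[str]:
    """Return the context files relevant to `target`, from the repo root down.

    Simplified model: a context file applies when it sits in the target's
    directory or in one of its ancestor directories.
    """
    directory = PurePosixPath(target).parent
    # Ancestors ordered from the repo root ('.') down to the target's directory
    chain = list(directory.parents)[::-1] + [directory]
    result = []
    for folder in chain:
        for name in CONTEXT_FILE_NAMES:
            candidate = str(folder / name)
            if candidate in context_files:
                result.append(candidate)
    return result


if __name__ == "__main__":
    files = {"AGENTS.md", "src/api/AGENTS.md", "web/CLAUDE.md"}
    print(applicable_context_files("src/api/handlers.py", files))
    # ['AGENTS.md', 'src/api/AGENTS.md']
```

A task touching only `src/api/` never pulls in `web/CLAUDE.md`, which is exactly the saving nested files are meant to provide.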

Most projects using AGENTS.md, CLAUDE.md, or both (75.04%) have only one file in their repo, so they don’t use nested files.

It’s hard to determine the optimal number of files, since it depends on the project’s context, structure, and architecture. But in many cases, more than one file can make sense.

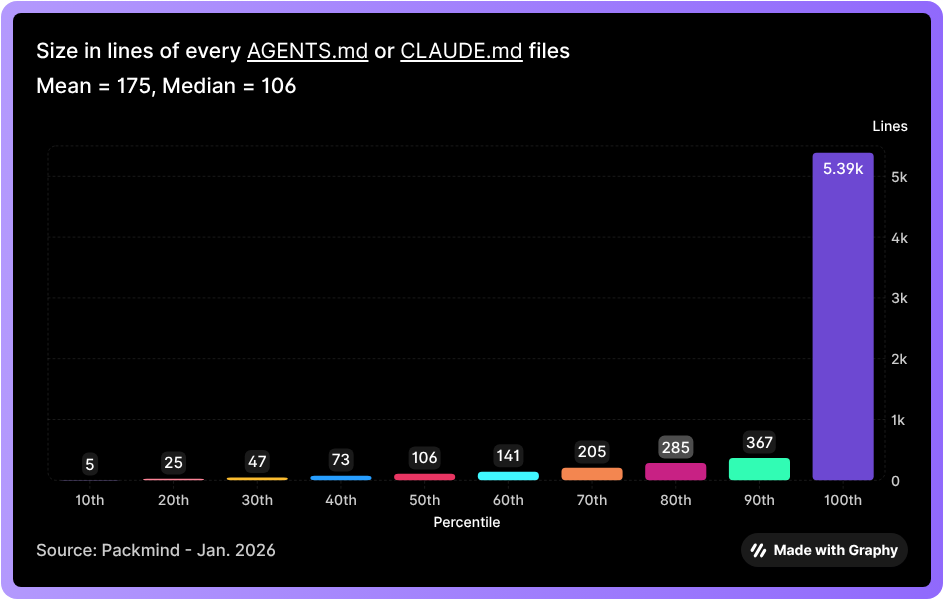
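Measuring how many projects rely on a single file is a matter of counting AGENTS.md and CLAUDE.md occurrences per repo. Here is a minimal Python sketch; it assumes both file names are counted together, so a repo with a root AGENTS.md and a root CLAUDE.md counts as having two files, which may differ from how the figure above was computed:

```python
from pathlib import PurePosixPath

CONTEXT_FILE_NAMES = {"AGENTS.md", "CLAUDE.md"}


def context_file_count(paths: list[str]) -> int:
    """Number of AGENTS.md / CLAUDE.md files anywhere in a repo."""
    return sum(PurePosixPath(p).name in CONTEXT_FILE_NAMES for p in paths)


def single_file_share(repos: dict[str, list[str]]) -> float:
    """Share of repos using these files that have exactly one of them."""
    counts = [context_file_count(paths) for paths in repos.values()]
    using = [c for c in counts if c > 0]  # ignore repos without any context file
    if not using:
        return 0.0
    return sum(c == 1 for c in using) / len(using)


if __name__ == "__main__":
    repos = {
        "repo-a": ["AGENTS.md", "src/main.py"],
        "repo-b": ["CLAUDE.md", "api/CLAUDE.md", "web/CLAUDE.md"],
        "repo-c": ["README.md"],
        "repo-d": ["CLAUDE.md"],
    }
    print(f"{single_file_share(repos):.2%}")  # 66.67%
```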

3. 📏 AGENTS.md and CLAUDE.md size

I was also curious about the length of these AGENTS.md and CLAUDE.md files.

The mean size is 175 lines and the median is 106 lines; a mean well above the median indicates that a few very long files pull the average up. I only counted lines, but looking at line length as well would give a more precise picture.

Here is the decile distribution:

A file of 5,390 lines is definitely too long IMHO, but the vast majority have a reasonable size. A more qualitative analysis would help assess the quality of the content, not just its size.
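These summary statistics can be computed with Python’s standard `statistics` module. Here is a minimal sketch, assuming the content of each AGENTS.md/CLAUDE.md file has already been fetched; counting lines with `str.splitlines()` is my own choice, not necessarily the method used in this study:

```python
import statistics


def count_lines(text: str) -> int:
    """Number of lines in a file's content (a trailing newline adds no line)."""
    return len(text.splitlines())


def size_summary(line_counts: list[int]) -> dict:
    """Mean, median and deciles of a list of file sizes (in lines)."""
    return {
        "mean": statistics.mean(line_counts),
        "median": statistics.median(line_counts),
        # 9 cut points splitting the data into 10 equally populated groups
        "deciles": statistics.quantiles(line_counts, n=10),
    }


if __name__ == "__main__":
    sizes = [count_lines("# Rules\n" * n) for n in (40, 80, 106, 150, 2000)]
    summary = size_summary(sizes)
    print(summary["mean"], summary["median"])  # 475.2 106
```

In this toy sample, one 2,000-line file is enough to push the mean far above the median, the same kind of skew the real numbers show.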

I also looked at a few AGENTS.md files to understand their structure and patterns. That deserves a dedicated blog post, but here is an example of content that could be improved:

### Code Style

1. **Python**: Follow existing patterns; minimize comments; keep variable names short

The “Follow existing patterns” instruction is too vague for AI agents: it doesn’t say which patterns to follow or where to find them. I’d say it is more meaningful to human developers.

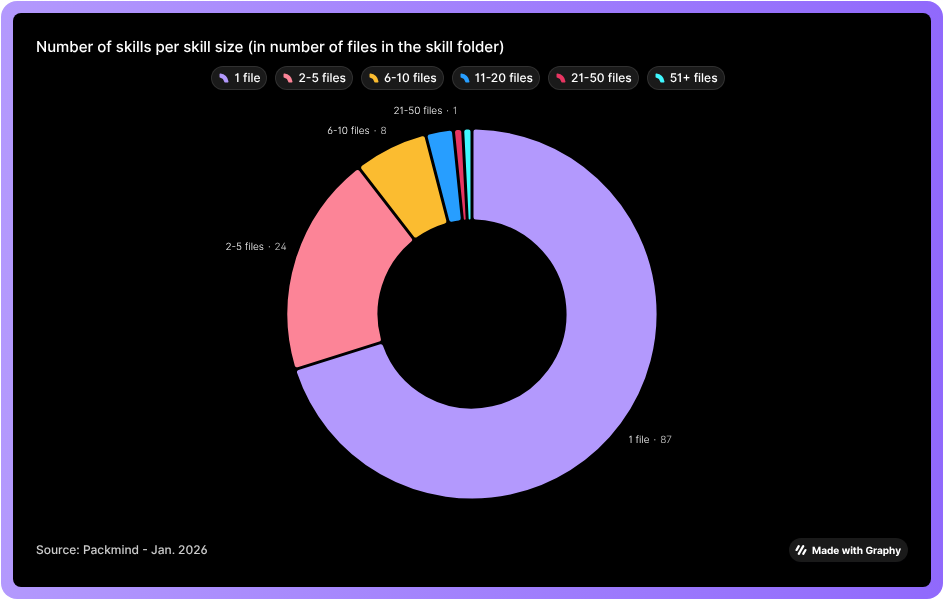

4. 🧠 Skills usage

A skill is a folder containing a SKILL.md file and, optionally, additional files and resources, as defined in the Agent Skills standard released at the end of 2025.

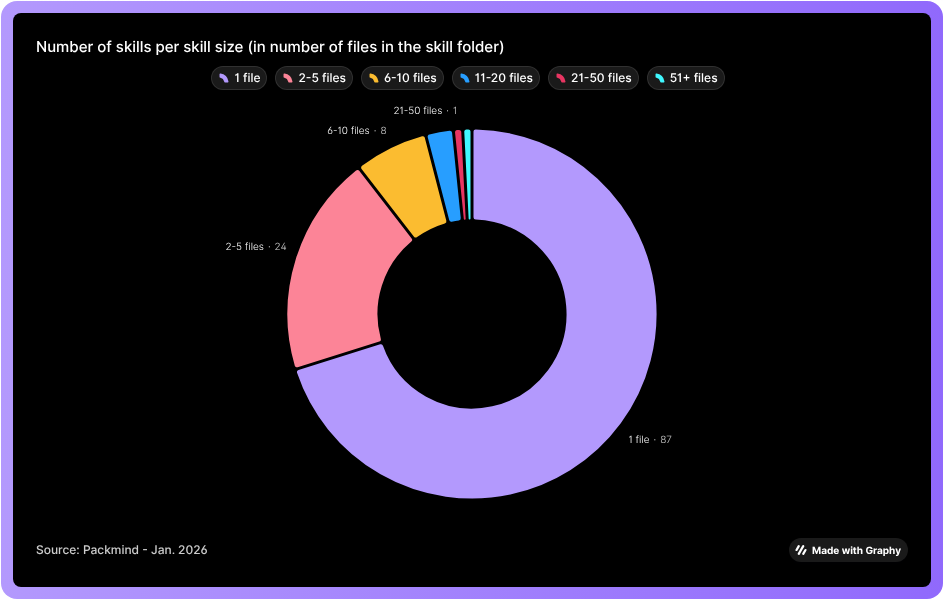

Across the 44 repos using Claude skills, I identified 124 unique skills. I wanted to see how many files each skill folder contains (including files in sub-directories). As we can see, 87 skills (about 70%) consist of a single SKILL.md file.
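Counting files per skill means grouping paths under each folder that contains a SKILL.md. Here is a minimal Python sketch, assuming a flat list of repo-relative paths; the function name is hypothetical:

```python
from pathlib import PurePosixPath


def files_per_skill(paths: list[str]) -> dict[str, int]:
    """Map each skill folder (a directory holding SKILL.md) to its file count.

    Files in sub-directories of the skill folder are included.
    """
    skill_dirs = [
        PurePosixPath(p).parent for p in paths if PurePosixPath(p).name == "SKILL.md"
    ]
    counts = {str(d): 0 for d in skill_dirs}
    for raw in paths:
        parents = tuple(PurePosixPath(raw).parents)
        for skill_dir in skill_dirs:
            if skill_dir in parents:  # the file lives somewhere under this skill
                counts[str(skill_dir)] += 1
    return counts


if __name__ == "__main__":
    paths = [
        ".claude/skills/release/SKILL.md",
        ".claude/skills/release/scripts/bump.py",
        ".claude/skills/lint/SKILL.md",
        "src/main.py",
    ]
    counts = files_per_skill(paths)
    print(counts)
    single = sum(1 for n in counts.values() if n == 1)
    print(f"{single} of {len(counts)} skills contain only SKILL.md")
```

A count of 1 means the skill folder holds nothing but its SKILL.md, the most common case in this dataset.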

Conclusion

This analysis highlights a clear gap between the widespread use of AI coding agents and their explicit support in open-source projects. Only a small share of active repositories provide context-engineering artefacts, and most do so in a minimal, non-scoped way.

Claude Code currently leads adoption, with early signs that reusable constructs like skills and commands are gaining traction, while other agents remain marginal. Overall, context engineering in open source is still an early and uneven practice, but one that is likely to become increasingly crucial for project maintainability and contributor experience as agent-driven development continues to grow.

For open-source maintainers, this suggests a clear opportunity: investing even minimal, well-scoped context artefacts (starting with a single AGENTS.md or CLAUDE.md) can significantly improve contributor efficiency without adding long-term maintenance overhead.

Part of our mission at Packmind is to help engineering teams create their first AI agent artefacts and bootstrap their context engineering strategy.