What We Learned From the Packmind × DX Webinar: The Real ROI of AI for Engineering Teams

Over the past year, AI coding assistants have moved from curiosity to critical infrastructure. Adoption is now over 90% among developers across the 436 companies studied, but adoption alone doesn’t guarantee results.

In our latest webinar with Justin Reock, Deputy CTO at DX, we explored what separates the engineering teams getting massive returns from those seeing only modest gains — or even negative impact.

Here are the three biggest insights that emerged from the data.

1️⃣ What’s Good for Humans Is Good for AI Agents

High-performing engineering fundamentals → the highest AI ROI

A major theme from the webinar:

AI doesn’t magically fix weak engineering practices. It amplifies them.

The data shows this clearly. Teams with strong development essentials get dramatically more from AI than teams with poor fundamentals. This aligns with both DORA and the DX research.

The three predictors of high AI impact:

1. Code Hygiene

Teams with clean architecture, consistent patterns, small PRs, and micro-commits give AI assistants the stable context they need to succeed.

This connects directly with the DORA findings showing that AI adoption is correlated with improvements in documentation quality (+7.5%), code quality (+3.4%), and reduced code complexity (–1.8%).

2. DevOps Hygiene

CI/CD, test reliability, and consistent deployment workflows matter even more in an AI-augmented environment. Poor DevOps hygiene creates noise and slows down feedback loops — reducing the value of AI-accelerated coding.

3. Learning Culture

Teams that experiment, share context, and continuously refine their workflows outperform teams with top-down mandates. DX’s data also shows that top-down AI mandates decrease psychological safety and correlate with negative outcomes.

Bottom line:

AI pays off the most when teams already operate like high-performing engineering organizations.

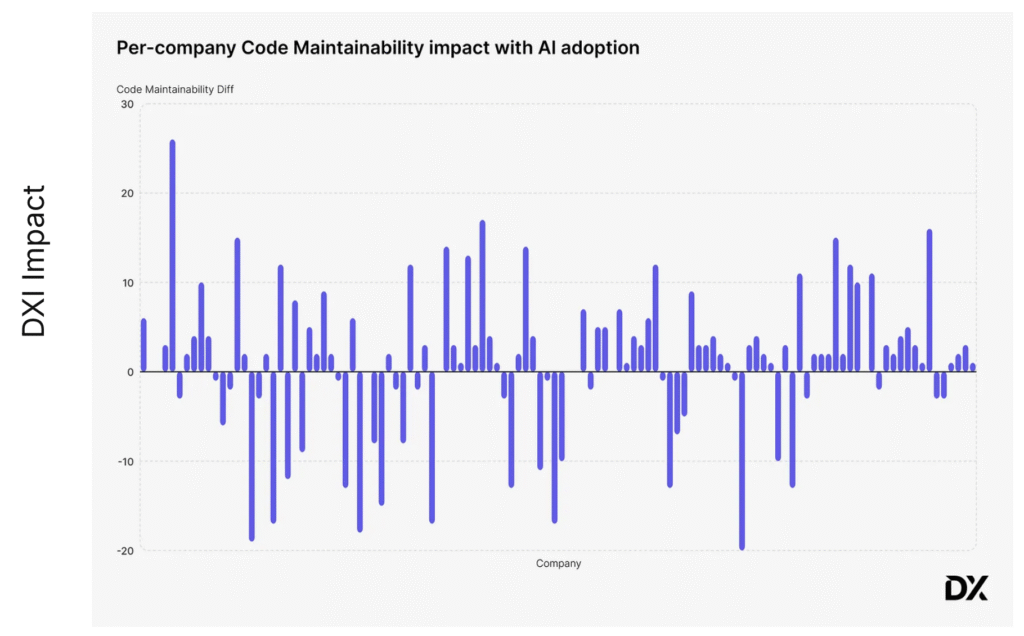

2️⃣ The Value of AI Is NOT Evenly Distributed

Industry averages hide a dramatic spread

AI’s impact looks modest when averaged across all companies:

- +2.6 points in Change Confidence (DXI)

- +2.2 points in Code Maintainability

- –0.11% in Change Failure Rate

But averages hide the real story.

Look at the per-company charts:

🟦 Some teams see huge gains: +20 to +30 DXI points in change confidence and maintainability.

🟥 Others see real declines: as low as –20 DXI points.

This variability is striking on the Change Failure Rate impact chart too:

Some companies reduce failures by up to 2%, while others increase failure rate by more than 2%.
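To make the "averages hide the spread" point concrete, here is a minimal Python sketch. The per-company values are made up for illustration and are not data from the webinar; the `summarize` helper is likewise hypothetical. It shows how a small positive mean can sit on top of a wide range of outcomes.

```python
from statistics import mean

# Hypothetical per-company changes in a DXI-style score (points).
# These numbers are invented for illustration, NOT webinar data.
deltas = [25, 18, 6, 2, -3, -12, -18]


def summarize(values):
    """Return the mean alongside simple measures of spread."""
    return {
        "mean": round(mean(values), 1),
        "best": max(values),
        "worst": min(values),
        "range": max(values) - min(values),
        "gainers": sum(1 for v in values if v > 0),
        "decliners": sum(1 for v in values if v < 0),
    }


summary = summarize(deltas)
for name, value in summary.items():
    print(f"{name}: {value}")
```

Reporting the best, worst, and count of decliners next to the mean is one simple way to keep a modest average from masking teams that are going backwards.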

Why such a spread?

Because AI is an amplifier:

- High-performing teams → get even faster, more confident, more maintainable.

- Low-performing teams → generate more chaos, more rework, and more hidden quality issues.

3️⃣ Measuring AI’s ROI Requires Maturity

Teams measure wildly different things, with wildly different rigor

During the webinar, Justin showed just how inconsistent measurement is across the industry. The DX AI Measurement Framework defines three pillars:

- Utilization

- Impact

- Cost

But companies vary dramatically in how they apply it.

High-maturity organizations measure: DAU/WAU (adoption), PR throughput (velocity), DXI and maintainability (quality), engineering hours saved, % AI-assisted PRs, % AI lines added…

Low-maturity organizations usually focus on: tool usage only, time savings in isolation, AI lines added.
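As a rough sketch of what tracking a couple of these utilization metrics could look like, the Python below computes daily and weekly active users and the share of AI-assisted PRs. Everything here is an assumption for illustration: the `UsageEvent` and `PullRequest` record shapes, the function names, and the sample data are hypothetical, and this is not the DX framework's actual implementation or definitions.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class UsageEvent:
    """Hypothetical record: a developer used an AI assistant on a given day."""
    developer: str
    day: date


@dataclass(frozen=True)
class PullRequest:
    """Hypothetical record: a merged PR and whether AI assisted it."""
    number: int
    ai_assisted: bool


def active_users(events, start, end):
    """Distinct developers with at least one event in [start, end]."""
    return {e.developer for e in events if start <= e.day <= end}


def daily_and_weekly_active(events, day):
    """Return (DAU, WAU) counts, with WAU over the 7 days ending on `day`."""
    daily = active_users(events, day, day)
    weekly = active_users(events, day - timedelta(days=6), day)
    return len(daily), len(weekly)


def ai_assisted_share(prs):
    """Fraction of PRs flagged as AI-assisted (0.0 when there are no PRs)."""
    return sum(pr.ai_assisted for pr in prs) / len(prs) if prs else 0.0


# Made-up sample data for illustration only.
today = date(2025, 1, 10)
events = [
    UsageEvent("alice", date(2025, 1, 10)),
    UsageEvent("carol", date(2025, 1, 10)),
    UsageEvent("bob", date(2025, 1, 8)),
    UsageEvent("dave", date(2025, 1, 1)),  # outside the 7-day window
]
prs = [
    PullRequest(1, True),
    PullRequest(2, True),
    PullRequest(3, False),
    PullRequest(4, True),
]

dau, wau = daily_and_weekly_active(events, today)
print(f"DAU={dau} WAU={wau} AI-assisted PR share={ai_assisted_share(prs):.0%}")
```

Numbers like these only cover utilization. On their own they say nothing about impact or cost, which is exactly the gap that low-maturity measurement leaves open.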

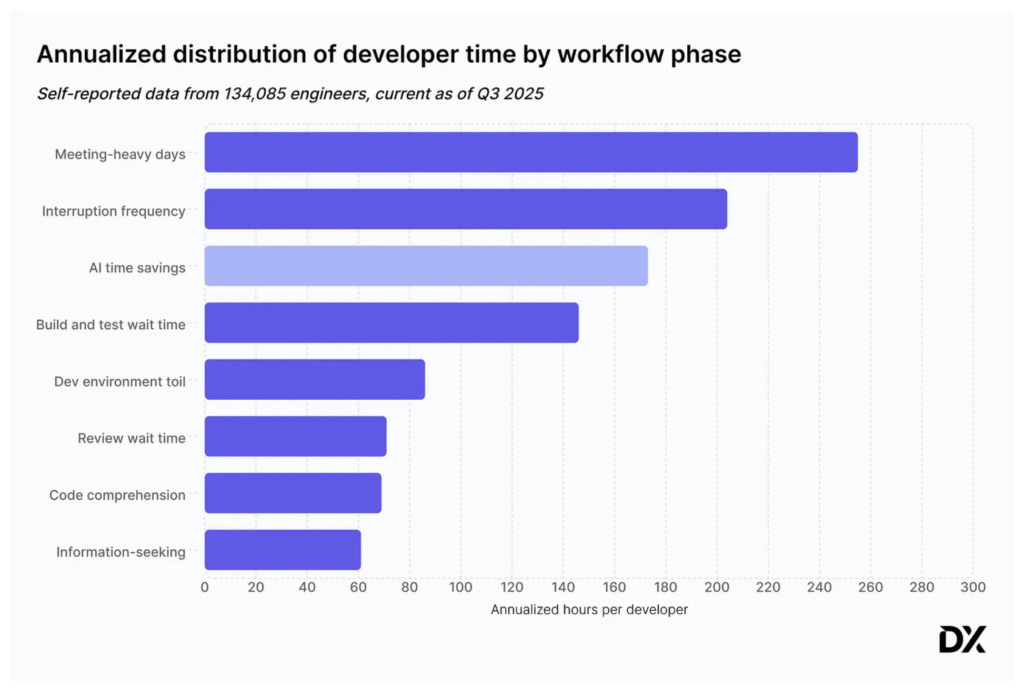

This contributes to the ROI variability we saw earlier. And as this slide illustrates, non-AI bottlenecks still dominate developer time: meetings, interruptions, environment issues, slow CI, debugging, context-switching.

If organizations don’t address these constraints, the ROI of AI-on-top is artificially capped.

🔄 AI still follows the basics — and context engineering is becoming the next essential

The biggest takeaway from the webinar is simple:

AI behaves like your best engineers — it performs best in structured, clean, context-rich environments.

This means the fundamentals still matter:

- Good architecture

- Clean PR habits

- Reliable tests

- Clear documentation

- Fast CI

- Healthy engineering culture

But the next frontier is emerging fast:

🌱 The Rise of Context Engineering

AI is becoming fully integrated into the SDLC, meaning teams now need to:

- Capture their engineering playbook

- Govern and version context

- Distribute standards across repos and agents

- Prevent context drift

- Ensure consistency across Claude, Cursor, Copilot, Kiro, etc.

This is ContextOps, and it’s quickly becoming a core discipline — just like DevOps became essential when software delivery accelerated.

Packmind was built for exactly this moment.

🎯 Final Thoughts

The Packmind × DX webinar made one thing crystal clear:

AI is not a shortcut — it’s a force multiplier. If you have strong engineering foundations, it amplifies excellence. If you don’t, it amplifies chaos.

And as coding assistants become ubiquitous, the companies that win will be the ones who master context engineering — ensuring that both humans and AI share the same standards, the same context, and the same workflow expectations.

If you want to bring this into your organization, explore Packmind open-source: